DeepAmandine

DeepAmandine is a chatbot based on GPT-3. It is friendly and makes a lot of jokes. We hope you have a good experience with the AI and have fun chatting with it.

Installation and usage

- To use the Android version (v1.0-beta):

1. Install the required Python libraries:

$ wget https://github.com/BuyWithCrypto/deep-amandine/releases/download/v1.0-beta/requirements.txt && pip3 install -r requirements.txt

2. Download the executable file:

$ wget https://github.com/BuyWithCrypto/deep-amandine/releases/download/v1.0-beta/DeepAmandine-android-v1.0-beta.pyc

3. Run the executable:

$ python3 DeepAmandine-android-v1.0-beta.pyc

- To use the Desktop version (v1.0-beta):

1. Install the required Python libraries:

$ wget https://github.com/BuyWithCrypto/deep-amandine/releases/download/v1.0-beta/requirements.txt && pip3 install -r requirements.txt

2. Download the executable file:

$ wget https://github.com/BuyWithCrypto/deep-amandine/releases/download/v1.0-beta/DeepAmandine-desktop-v1.0-beta.pyc

3. Run the executable:

$ python3 DeepAmandine-desktop-v1.0-beta.pyc
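The Android and Desktop steps differ only in the file name, and a .pyc file is compiled for one specific Python version, so an interpreter from a different version will typically refuse to run it. The sketch below is only an illustration and is not part of DeepAmandine: the helper names release_urls and pyc_matches_interpreter are hypothetical, and the URL pattern is assumed from the two commands above. It builds the download URLs for a platform and checks whether a downloaded .pyc was compiled for the Python you are running.

```python
import importlib.util

# Base URL taken from the wget commands above.
BASE_URL = "https://github.com/BuyWithCrypto/deep-amandine/releases/download"


def release_urls(platform: str, version: str = "v1.0-beta") -> tuple[str, str]:
    """Return (requirements_url, pyc_url) for 'android' or 'desktop'.

    Hypothetical helper: the naming pattern is inferred from the two
    releases listed in this README and may not hold for other versions.
    """
    if platform not in ("android", "desktop"):
        raise ValueError(f"unknown platform: {platform!r}")
    prefix = f"{BASE_URL}/{version}"
    return (
        f"{prefix}/requirements.txt",
        f"{prefix}/DeepAmandine-{platform}-{version}.pyc",
    )


def pyc_matches_interpreter(path: str) -> bool:
    """Return True if the .pyc file starts with this interpreter's magic number.

    The first 4 bytes of a .pyc identify the bytecode format of the
    Python version that compiled it.
    """
    with open(path, "rb") as f:
        header = f.read(4)
    return header == importlib.util.MAGIC_NUMBER
```

For example, after downloading the Desktop file, pyc_matches_interpreter("DeepAmandine-desktop-v1.0-beta.pyc") returning False suggests trying a different Python 3 version before running step 3.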

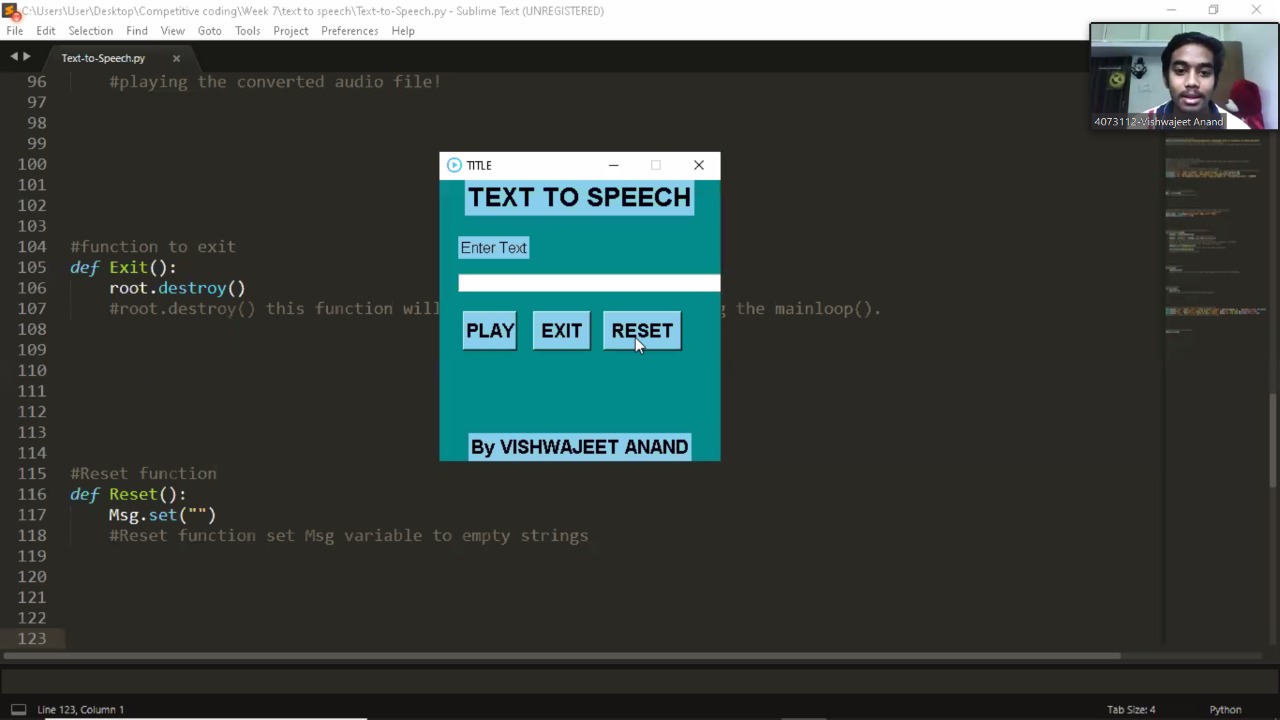

Examples of use

You can choose the language in which you chat with the AI.

You can choose the username you will use in the chat.

Once all the steps have been completed, you can start talking to the AI.