Apache MXNet (incubating) for Deep Learning


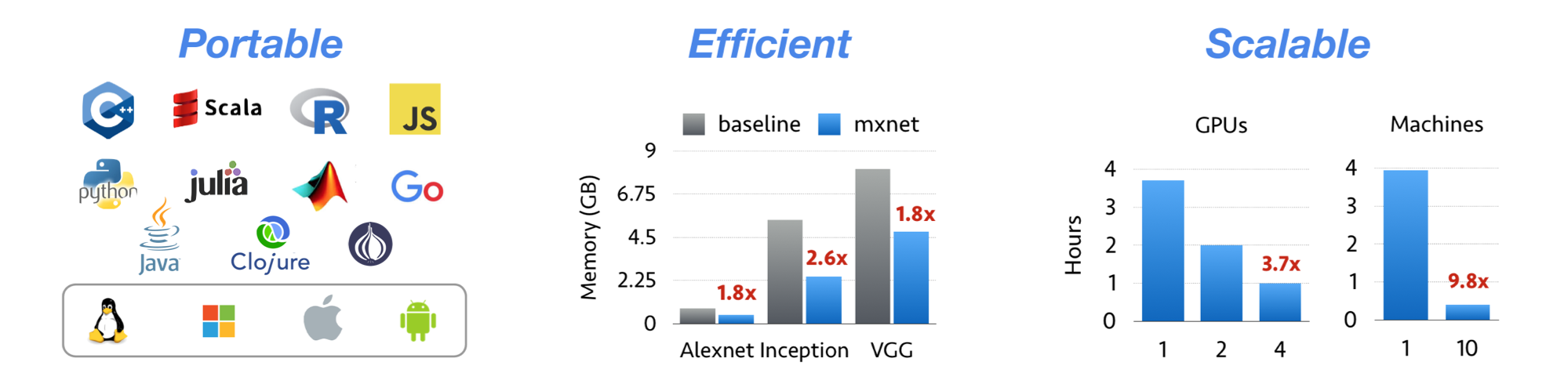

Apache MXNet (incubating) is a deep learning framework designed for both efficiency and flexibility. It allows you to mix symbolic and imperative programming to maximize efficiency and productivity. At its core, MXNet contains a dynamic dependency scheduler that automatically parallelizes both symbolic and imperative operations on the fly. A graph optimization layer on top of that makes symbolic execution fast and memory efficient. MXNet is portable and lightweight, scaling effectively to multiple GPUs and multiple machines.
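The dependency-scheduling idea can be pictured with a toy model. The sketch below is not MXNet's engine. It is a simplified illustration, assuming each operation declares the variables it reads and writes. An operation must wait for any earlier operation it conflicts with (read-after-write, write-after-read or write-after-write on a shared variable). Operations with no such conflict land in the same "wave" and could run in parallel. The names Op and schedule_waves are hypothetical, and the code targets Python 3.7+.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Op:
    """A toy operation that declares which variables it reads and writes."""
    name: str
    reads: Set[str] = field(default_factory=set)
    writes: Set[str] = field(default_factory=set)


def schedule_waves(ops: List[Op]) -> List[List[str]]:
    """Group ops (given in program order) into waves of mutually independent ops.

    An op is placed one wave after the latest earlier op it conflicts with.
    Ops in the same wave share no conflicting variable, so they could run in parallel.
    """
    level: Dict[int, int] = {}  # op index -> wave number
    for i, op in enumerate(ops):
        wave = 0
        for j in range(i):
            prev = ops[j]
            conflict = (
                op.reads & prev.writes      # read-after-write
                or op.writes & prev.reads   # write-after-read
                or op.writes & prev.writes  # write-after-write
            )
            if conflict:
                wave = max(wave, level[j] + 1)
        level[i] = wave
    waves: List[List[str]] = [[] for _ in range(max(level.values(), default=-1) + 1)]
    for i, op in enumerate(ops):
        waves[level[i]].append(op.name)
    return waves


if __name__ == "__main__":
    program = [
        Op("a = ones()", writes={"a"}),
        Op("b = ones()", writes={"b"}),
        Op("c = a + b", reads={"a", "b"}, writes={"c"}),
        Op("d = a * 2", reads={"a"}, writes={"d"}),
        Op("e = c + d", reads={"c", "d"}, writes={"e"}),
    ]
    print(schedule_waves(program))
```

In the sample program, c = a + b and d = a * 2 share a wave because both only read a. The final e = c + d has to wait for both of them.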

MXNet is more than a deep learning project. It is also a collection of blueprints and guidelines for building deep learning systems, and a source of interesting insights into DL systems for hackers.

Installation Guide

Install Dependencies to build MXNet for HIP/ROCm

Install Dependencies to build MXNet for HIP/CUDA

- Install CUDA following NVIDIA’s installation guide to set up MXNet with GPU support.

- Make sure to add the CUDA install path to LD_LIBRARY_PATH.

- Example: export LD_LIBRARY_PATH=/usr/local/cuda/lib64/:$LD_LIBRARY_PATH
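To confirm the export took effect in the shell that will run MXNet, the variable can be checked from Python. The helper below is hypothetical (not part of MXNet). It assumes the /usr/local/cuda/lib64 directory from the example above and only inspects LD_LIBRARY_PATH; it does not check that the CUDA libraries are actually present.

```python
import os
from typing import Mapping, Optional


def cuda_lib_on_path(cuda_lib: str = "/usr/local/cuda/lib64",
                     env: Optional[Mapping[str, str]] = None) -> bool:
    """Return True if cuda_lib appears as an entry in LD_LIBRARY_PATH.

    Entries are normalized first, so "/usr/local/cuda/lib64/" and
    "/usr/local/cuda/lib64" are treated as the same directory.
    """
    env = os.environ if env is None else env
    target = os.path.normpath(cuda_lib)
    # LD_LIBRARY_PATH is a Linux variable, so entries are colon-separated.
    entries = env.get("LD_LIBRARY_PATH", "").split(":")
    return any(os.path.normpath(e) == target for e in entries if e)


if __name__ == "__main__":
    print("CUDA libs on LD_LIBRARY_PATH:", cuda_lib_on_path())
```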

Build the MXNet library

- Step 1: Install build tools.

sudo apt-get update

sudo apt-get install -y build-essential

- Step 2: Install OpenBLAS. MXNet uses BLAS and LAPACK libraries for accelerated numerical computations on CPU machines. Several flavors of BLAS/LAPACK libraries are available: OpenBLAS, ATLAS and MKL. In this step we install OpenBLAS; you can choose to install ATLAS or MKL instead. The command below also installs the LAPACK, OpenMP and ATLAS development packages.

sudo apt-get install -y libopenblas-dev liblapack-dev libomp-dev libatlas-dev libatlas-base-dev

- Step 3: Install OpenCV. MXNet uses OpenCV for efficient image loading and augmentation operations.

sudo apt-get install -y libopencv-dev

- Step 4: Download the MXNet sources and add the ROCm binaries to PATH (the core shared library itself is built in Step 7).

git clone --recursive https://github.com/ROCmSoftwarePlatform/mxnet.git

cd mxnet

export PATH=/opt/rocm/bin:$PATH

- Step 5: Select the HIP platform. To compile on the HCC platform (HIP/ROCm):

export HIP_PLATFORM=hcc

To compile on the NVCC platform (HIP/CUDA):

export HIP_PLATFORM=nvcc
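Before building, it can help to verify Steps 4 and 5 in the current shell. The hypothetical helper below (not an MXNet tool) checks that HIP_PLATFORM is one of the two values used above and that hipcc is reachable on PATH. It assumes hipcc lives in /opt/rocm/bin, as the PATH export in Step 4 implies.

```python
import os
import shutil
from typing import List, Mapping, Optional

VALID_HIP_PLATFORMS = ("hcc", "nvcc")  # the two values used in Step 5


def preflight_problems(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return human-readable problems with the build environment; empty means ready."""
    env = os.environ if env is None else env
    problems = []
    platform = env.get("HIP_PLATFORM")
    if platform not in VALID_HIP_PLATFORMS:
        problems.append(
            f"HIP_PLATFORM should be one of {VALID_HIP_PLATFORMS}, got {platform!r}"
        )
    # shutil.which searches the given PATH string; an empty PATH finds nothing.
    if shutil.which("hipcc", path=env.get("PATH", "")) is None:
        problems.append("hipcc not found on PATH (did you export PATH=/opt/rocm/bin:$PATH?)")
    return problems


if __name__ == "__main__":
    for problem in preflight_problems():
        print("problem:", problem)
```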

- Step 6: To enable MIOpen for higher acceleration, set the following flag (in this HIP port, USE_CUDNN controls MIOpen):

USE_CUDNN=1

- Step 7: Build the library. The commands below use -j$(nproc) to run one make job per available CPU core.

If building on CPU:

make -j$(nproc) USE_GPU=0    # Ubuntu 16.04

make -j$(nproc) CXX=g++-6 USE_GPU=0    # Ubuntu 18.04

If building on GPU:

make -j$(nproc) USE_GPU=1    # Ubuntu 16.04

make -j$(nproc) CXX=g++-6 USE_GPU=1    # Ubuntu 18.04
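The Step 7 variants differ only in the compiler override and the USE_GPU value, so they can be generated rather than copied. The sketch below is a hypothetical helper written for Python 3.8+ (for shlex.join). It uses os.cpu_count(), which counts all logical CPUs and may differ from nproc inside containers or when CPU affinity is restricted. The optional miopen flag appends USE_CUDNN=1 from Step 6, assuming the Makefile honors command-line overrides the way GNU make does for ordinary assignments.

```python
import os
import shlex
from typing import List, Optional


def make_command(use_gpu: bool, ubuntu: str = "16.04",
                 jobs: Optional[int] = None, miopen: bool = False) -> List[str]:
    """Build the Step 7 make invocation as an argument list."""
    jobs = jobs or os.cpu_count() or 1  # roughly equivalent to -j$(nproc)
    cmd = ["make", f"-j{jobs}"]
    if ubuntu == "18.04":
        cmd.append("CXX=g++-6")  # Step 7 uses g++-6 on Ubuntu 18.04
    cmd.append(f"USE_GPU={1 if use_gpu else 0}")
    if miopen:
        cmd.append("USE_CUDNN=1")  # Step 6: enable MIOpen (assumed command-line override)
    return cmd


if __name__ == "__main__":
    print(shlex.join(make_command(use_gpu=True, ubuntu="18.04")))
```

To run the build, the list can be passed to subprocess.run(make_command(use_gpu=True), cwd="mxnet", check=True), assuming the repository was cloned to ./mxnet.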

On successful compilation, a library called libmxnet.so is created in the mxnet/lib directory.
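A small post-build check can catch a failed compile before moving on to the Python binding. This hypothetical helper assumes the repository root is ./mxnet and only checks that lib/libmxnet.so exists as a regular file.

```python
from pathlib import Path


def built_library(repo_root: str = "mxnet") -> Path:
    """Return the path to lib/libmxnet.so, raising if the build did not produce it."""
    lib = Path(repo_root) / "lib" / "libmxnet.so"
    if not lib.is_file():
        raise FileNotFoundError(f"{lib} not found; check the make output for errors")
    return lib


if __name__ == "__main__":
    try:
        print("found", built_library())
    except FileNotFoundError as err:
        print(err)
```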

NOTE: The USE_CUDA and USE_CUDNN flags can be changed in make/config.mk.

To compile on HIP/CUDA, make sure to set USE_CUDA_PATH to the correct CUDA installation path in make/config.mk. In most cases this is /usr/local/cuda.
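Editing make/config.mk by hand works, but the flag changes can also be scripted. The sketch below is a hypothetical helper that assumes assignments start at the beginning of a line in the form KEY = value (for example USE_CUDA = 0). Commented-out lines are left alone, and keys that are not found are appended. It rewrites text only, so reading and writing the file is up to the caller.

```python
import re
from typing import Dict


def set_config_flags(text: str, flags: Dict[str, str]) -> str:
    """Return config.mk text with each KEY assignment set to the given value."""
    for key, value in flags.items():
        # Match "KEY = ...", "KEY := ..." or "KEY ?= ..." at the start of a line only,
        # so USE_CUDA does not match USE_CUDA_PATH and "# USE_CUDA = 1" is skipped.
        pattern = re.compile(rf"^{re.escape(key)}\s*[:?]?=.*$", re.MULTILINE)
        line = f"{key} = {value}"
        if pattern.search(text):
            # A callable replacement avoids backslash processing in the value.
            text = pattern.sub(lambda _m: line, text)
        else:
            prefix = text.rstrip("\n")
            text = (prefix + "\n" if prefix else "") + line + "\n"
    return text


if __name__ == "__main__":
    sample = "USE_CUDA = 0\nUSE_CUDA_PATH = NONE\nUSE_CUDNN = 0\n"
    print(set_config_flags(sample, {"USE_CUDA_PATH": "/usr/local/cuda"}), end="")
```

For example, set_config_flags(text, {"USE_CUDA_PATH": "/usr/local/cuda", "USE_CUDNN": "1"}) applies the settings described above to the contents of make/config.mk.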

Install the MXNet Python binding

- Step 1: Install the prerequisites: Python, setuptools, pip, NumPy and SciPy, plus the Tk, FFTW and pkg-config packages.

sudo apt-get install -y python-dev python-setuptools python-numpy python-pip python-scipy

sudo apt-get install python-tk

sudo apt install -y fftw3 fftw3-dev pkg-config

- Step 2: Install the MXNet Python binding.

cd python

sudo python setup.py install

- Step 3: Run a sample example.

cd ../example/bayesian-methods/

To run on a GPU, change mx.cpu() to mx.gpu() in the Python script (for example, bdk_demo.py).

python bdk_demo.py
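Instead of editing each mx.cpu() call by hand, a script can pick the device once. This is a sketch, assuming your build exposes mx.context.num_gpus() (available in upstream MXNet 1.3 and later; not verified for this HIP port) and that mx.gpu() maps to the HIP device, as the instruction above implies. The module is passed in as an argument so the function can be exercised without MXNet installed; choose_context is a hypothetical name.

```python
def choose_context(mx, prefer_gpu: bool = True):
    """Return mx.gpu(0) if MXNet reports at least one GPU, otherwise mx.cpu()."""
    if prefer_gpu and mx.context.num_gpus() > 0:
        return mx.gpu(0)
    return mx.cpu()


if __name__ == "__main__":
    try:
        import mxnet
    except ImportError:
        print("mxnet is not installed; see the Python binding steps above")
    else:
        print("using", choose_context(mxnet))
```

The returned context can then be passed as ctx= to array constructors such as mx.nd.ones((2, 3), ctx=ctx).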

Ask Questions

- Please use mxnet/issues for reporting bugs.

What's New

- [Version 1.4.0 Release](https://github.com/apache/incubator-mxnet/releases/tag/1.4.0) - MXNet 1.4.0 Release (release page to be created).


Features

- Design notes providing useful insights that can be reused by other DL projects

- Flexible configuration for arbitrary computation graphs

- Mix and match imperative and symbolic programming to maximize flexibility and efficiency

- Lightweight, memory efficient and portable to smart devices

- Scales up to multiple GPUs and distributed settings with automatic parallelism

- Support for Python, R, Scala, C++ and Julia

- Cloud-friendly and directly compatible with S3, HDFS, and Azure

License

Licensed under an Apache-2.0 license.

Reference Paper

Tianqi Chen, Mu Li, Yutian Li, Min Lin, Naiyan Wang, Minjie Wang, Tianjun Xiao, Bing Xu, Chiyuan Zhang, and Zheng Zhang. MXNet: A Flexible and Efficient Machine Learning Library for Heterogeneous Distributed Systems. In Neural Information Processing Systems, Workshop on Machine Learning Systems, 2015

History

MXNet emerged from a collaboration by the authors of cxxnet, minerva, and purine2. The project reflects what we have learned from the past projects. MXNet combines aspects of each of these projects to achieve flexibility, speed, and memory efficiency.